In the interim, several blogs, the winediarist in particular, have held long and fairly tedious chains of comments about the study by people who have clearly not read it and do not understand statistics.

Here's my problem: it's a small-bore student paper that sticks closely to its data, applies the wrong test, and, most importantly, doesn't appreciate the role of pricing in an economic system. You see, price is a measure of quality, just like all the rating systems in the paper, especially in the 2005 Bordeaux data set, where prices have been reacting to scores and consumer demand for about four years. If you want to measure point systems against release prices, well... that's another story... one that has nothing to do with consumer attitudes, but it might be interesting.

I urge anyone interested in the subject to read

Also a good read is

The field of wine pricing is rich, really rich. There are cult wines priced according to perceived scarcity. Grocery store pricing is very sensitive to sales figures. The three-tier system of distribution in this country makes price-sensitive discounting an interesting subject.

The important thing to remember, however, is that price and ratings are both measuring the same thing: quality. In other words, where the market is at equilibrium, better wines will command higher prices. Short-term disequilibrium will occur where a hidden gem hasn't been discovered yet, an old vintage is discounted to make room in the market for a new release, or a loss leader is advertised to get people into a store. But in general, the better the wine is, the more it will cost. And the more rating points a wine garners from critics, the more it will cost. Price and rating are both telling the same story (they are both y variables in a study with no x), so comparing them doesn't bring much new knowledge to the table.
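To make the "two y variables, no x" point concrete, here is a rough sketch in Python. Everything in it is made up for illustration: the hidden `quality` variable, the coefficients, and the noise levels are my assumptions, not numbers from the study. It only shows that when one unobserved factor drives both rating and price, the two will correlate strongly, and the correlation largely disappears once that shared factor is removed.

```python
import math
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate(n=2000, seed=0):
    """Synthetic wines (assumed model): one hidden quality drives both outcomes."""
    rng = random.Random(seed)
    quality, rating, log_price = [], [], []
    for _ in range(n):
        q = rng.gauss(0, 1)                                   # unobserved quality
        quality.append(q)
        rating.append(90 + 3.0 * q + rng.gauss(0, 1))         # critic score
        log_price.append(3.5 + 0.8 * q + rng.gauss(0, 0.4))   # log of price
    return quality, rating, log_price


quality, rating, log_price = simulate()

# Correlation between the two observed outcomes.
r_raw = pearson(rating, log_price)

# Subtract the quality component (known here only because we simulated it).
rating_left = [r - 3.0 * q for r, q in zip(rating, quality)]
price_left = [p - 0.8 * q for p, q in zip(log_price, quality)]
r_left = pearson(rating_left, price_left)

print(f"rating vs log price:          r = {r_raw:.2f}")
print(f"after removing shared quality: r = {r_left:.2f}")
```

In real data the quality term is never observed directly, which is exactly why comparing price to rating tells you so little on its own.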

I have some specific statistical objections to the study's data that go along with this phenomenon, which we call multicollinearity. For example, CT scores suffer from bias because people who shelled out lots of money are less likely to rate a wine poorly than those who paid less. Tanzer, at least in the hour I spent exploring his website, does not claim to taste blind, so his scores will be biased at least in part by reputation, which is also collinear with price. Last, the Parker data mixes barrel samples that aren't blind with post-release scores that are. As it stands, one cannot draw any conclusions from this study because of these procedural mistakes. It would be interesting to go back.
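For readers who want a feel for why collinearity matters, one common yardstick is the variance inflation factor (VIF). With exactly two predictors whose correlation is r, it reduces to 1 / (1 - r²). The sketch below is only that textbook formula; the function name is my own, and the correlation values are arbitrary examples, not estimates from the study's data.

```python
def vif_two_predictors(r):
    """VIF for a regression with exactly two predictors correlated at r.

    Hypothetical helper for illustration: VIF = 1 / (1 - r^2).
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return 1.0 / (1.0 - r * r)


# Arbitrary example correlations, e.g. between price and reputation.
for r in (0.0, 0.5, 0.9, 0.99):
    print(f"r = {r:4.2f}  ->  VIF = {vif_two_predictors(r):.2f}")
```

The higher the VIF, the less a regression can separate the effect of one variable (say, reputation) from the other (price), which is the worry with non-blind scores.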

I'm actually writing a whole article on this based on Benjamin Lewin, MW's writings in his two books and in Tong magazine. Stay tuned!


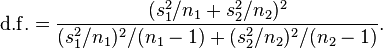

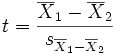

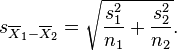

is not a pooled variance. For use in significance testing, the distribution of the test statistic is approximated as being an ordinary
